Decentralized GPU Networks: Bridging the Gap in AI Workloads

While frontier AI training remains concentrated in large data centers, decentralized GPU networks are finding a niche in inference and everyday workloads.

Decentralized GPU networks aim to provide a cost-effective alternative for running AI workloads, even as most AI training still takes place in large hyperscale data centers.

These networks are poorly suited to the upfront training of AI systems. Training demands tightly synchronized hardware, and spreading that work across the internet introduces logistical and latency problems.

However, as inference and other everyday workloads grow, decentralized networks can handle these smaller tasks effectively.

“What we are beginning to see is that many open-source and other models are becoming compact enough and sufficiently optimized to run very efficiently on consumer GPUs,” says Mitch Liu.

Training frontier AI models is highly GPU-intensive and remains concentrated in hyperscale data centers. Source: Derya Unutmaz


From frontier AI training to everyday inference

Frontier training is handled mainly in sophisticated data centers equipped with hardware such as Nvidia's latest AI chips.

“Building a skyscraper in a centralized data center is straightforward, but decentralization complicates matters immensely due to increased inefficiencies,” according to Nökkvi Dan Ellidason.

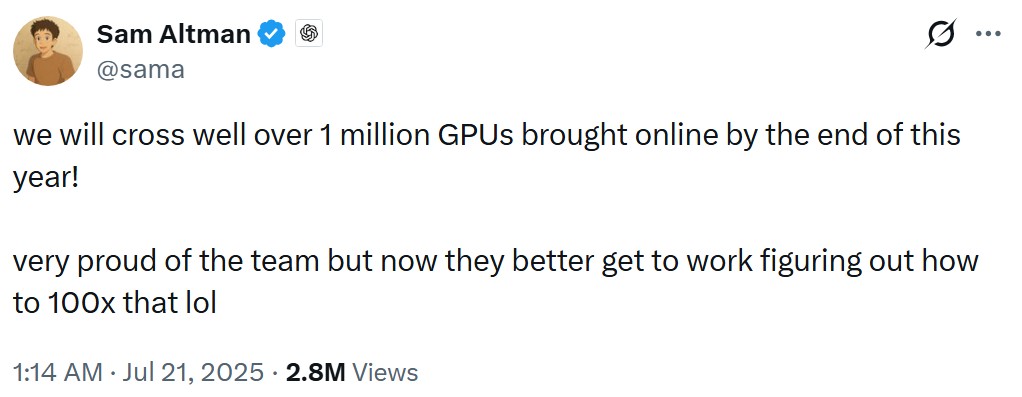

AI giants continue to absorb a growing share of global GPU supply. Source: Sam Altman


Meta's Llama 4 model relied heavily on Nvidia hardware, and OpenAI's GPT-5 involved more than 200,000 GPUs.

Inference is beginning to dominate GPU demand. Forecasts suggest that most of the 2026 market will go toward running applications on already-trained models rather than training new ones, marking an economic shift in computational demand.

Where decentralized GPU networks actually fit

These networks work best where workloads don't require constant coordination. Tasks such as inference, which are cost-sensitive and can tolerate variable performance, are a good fit for decentralized systems.

“Today, they are more suited to AI drug discovery, text-to-image/video and large-scale data processing pipelines,” says Bob Miles.

Because decentralized nodes are spread around the world, they can sit geographically closer to users, which can reduce latency and improve the user experience globally.

A complementary layer in AI computing

Although frontier training is expected to remain centralized, the rise of decentralized computing reflects a necessary evolution as organizations adopt more flexible workflows.

As consumer GPUs grow more capable and open-source models flourish, AI continues to move toward a more distributed architecture.